Facing rapid data growth, productivity app Notion has moved most of its data ingestion off its warehouse-centric pipeline and onto a custom-built data lake. The new architecture lets Notion process and analyze user data faster and at lower cost, and it underpins newer features such as AI-powered functionality.

As Notion's user base has grown, its user data has doubled every 6-12 months. This growth strained the existing pipeline, which used Fivetran to ingest data from Postgres into Snowflake. The key challenges included:

- Maintaining Hundreds of Connectors: Managing 480 Fivetran connectors, one for each Postgres shard, proved burdensome for Notion's engineers.

- Data Freshness: The existing setup resulted in slow and expensive data ingestion to Snowflake, which is optimized for insert-heavy workloads rather than Notion's update-heavy environment.

- Limited Transformation Capabilities: Implementing complex data transformations became difficult with the standard SQL interface offered by off-the-shelf data warehouses.
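To put the doubling rate cited above in perspective, the short calculation below shows how much data volume compounds over a fixed horizon. The three-year horizon and the helper name `growth_factor` are illustrative assumptions, not figures from Notion.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Return how many times larger the data is after `years`,
    given that it doubles every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


# Hypothetical three-year horizon at both ends of the 6-12 month range.
for months in (6, 12):
    print(f"doubling every {months} months -> {growth_factor(3, months):.0f}x in 3 years")
# doubling every 6 months -> 64x in 3 years
# doubling every 12 months -> 8x in 3 years
```

Even at the slow end of the range, a pipeline sized for today's volume would face roughly eight times the data within three years.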

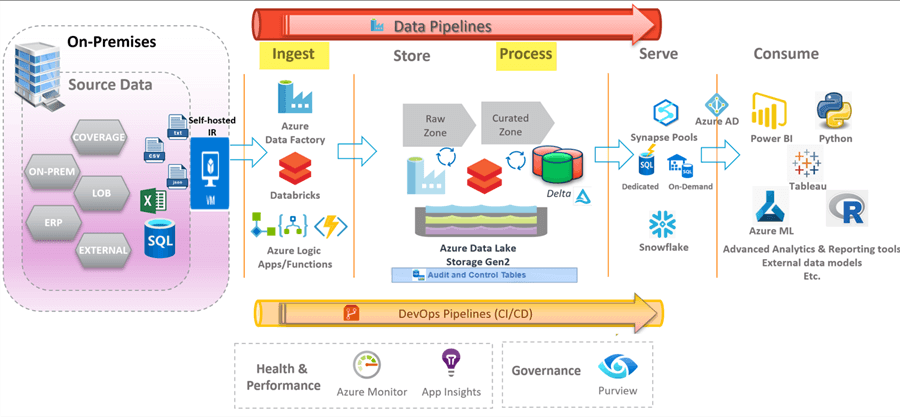

To address these issues, Notion built its own data lake on AWS S3 using open-source technologies such as Debezium, Apache Kafka, Apache Spark, and Apache Hudi.

Here's a breakdown of the new architecture:

- Debezium: This tool captures data changes from Notion's Postgres databases in near real time and publishes them to Apache Kafka.

- Apache Spark: Spark processes and transforms the data received from Kafka.

- Apache Hudi: This framework enables efficient updates to the data lake stored in AWS S3, ensuring data consistency and allowing for rollbacks if necessary.
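The core idea behind this flow, turning a stream of change events into an up-to-date table, can be sketched in plain Python. This is a simplified illustration, not Notion's implementation: the events mimic the `op`, `before`, `after`, and `ts_ms` fields of a Debezium change event, while the function name `apply_change_events`, the `id` key, and the sample rows are assumptions made for the example. In the real pipeline this work would be done by Spark jobs writing Hudi tables rather than by an in-memory dictionary.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change_events(events: Iterable[Dict[str, Any]], key: str = "id") -> Dict[Any, Row]:
    """Fold Debezium-style change events into the latest row per key."""
    table: Dict[Any, Row] = {}
    last_ts: Dict[Any, int] = {}
    for ev in events:
        op = ev["op"]
        # Deletes carry the old row in "before"; other ops carry the new row in "after".
        row = ev["before"] if op == "d" else ev["after"]
        k = row[key]
        # Ignore events older than the one already applied for this key.
        if ev["ts_ms"] < last_ts.get(k, -1):
            continue
        last_ts[k] = ev["ts_ms"]
        if op == "d":
            table.pop(k, None)
        else:  # "c" (create), "u" (update), or "r" (snapshot read)
            table[k] = row
    return table


# Hypothetical sample events: two inserts, one update, one delete.
events = [
    {"op": "c", "ts_ms": 1, "before": None, "after": {"id": 1, "title": "Draft"}},
    {"op": "c", "ts_ms": 2, "before": None, "after": {"id": 2, "title": "Notes"}},
    {"op": "u", "ts_ms": 3, "before": {"id": 1, "title": "Draft"}, "after": {"id": 1, "title": "Final"}},
    {"op": "d", "ts_ms": 4, "before": {"id": 2, "title": "Notes"}, "after": None},
]
snapshot = apply_change_events(events)
print(snapshot)  # {1: {'id': 1, 'title': 'Final'}}
```

Four events collapse into a single current row, which is why an update-heavy workload benefits from a storage layer that can upsert by key instead of only appending.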

This new in-house data lake offers Notion significant advantages:

- Faster Data Processing: Ingestion times have been reduced from over a day to mere minutes or hours.

- Cost Savings: Notion has achieved millions of dollars in cost savings through this new approach.

- Improved Scalability: The data lake architecture is designed to handle Notion's ever-growing data volume.

- Foundation for AI Features: The faster data processing allows Notion to integrate new features that rely on machine learning.

Notion still uses Snowflake and Fivetran for the use cases they suit well. The custom data lake, however, gives Notion the flexibility and scalability it needs to handle its large and fast-growing data volume.